When it comes to logging solutions, you can choose either a managed or an unmanaged one. If you decide to go with the former and you are on Google Cloud Platform, the recommended option is Stackdriver Logging.

Google offers a custom Fluentd-based package to send logs to Stackdriver (we’ll talk about it in an upcoming post), but if you are only concerned about Docker logs and want to avoid installing anything on the compute instances, there is an easier option: gcplogs.

Requirements

To be able to send logs to Stackdriver, the first thing you need to do is create a service account and assign it to your compute instances. The following roles must be granted to the new service account:

- roles/monitoring.metricWriter

- roles/logging.logWriter

You might also need to set the following scope in the compute instance or the instance template that should be using the service account:

- scope: cloud-platform

We won’t go over it step by step; I’m assuming that you already know how to do that.
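
For reference, the setup above could be sketched with the gcloud CLI roughly as follows. This is only a starting point, not a full walkthrough: the project ID, account name, instance name and zone are placeholders, and the full role identifiers used here are roles/logging.logWriter and roles/monitoring.metricWriter.

```shell
# Sketch only: every name passed to this function is a placeholder.

# Create a service account and grant it the logging and monitoring writer roles.
# $1: project ID, $2: short name for the new service account
create_logging_sa() {
  local project_id="$1" sa_name="$2"
  local sa_email="${sa_name}@${project_id}.iam.gserviceaccount.com"

  gcloud iam service-accounts create "$sa_name" \
    --project="$project_id" \
    --display-name="Docker gcplogs writer"

  local role
  for role in roles/logging.logWriter roles/monitoring.metricWriter; do
    gcloud projects add-iam-policy-binding "$project_id" \
      --member="serviceAccount:${sa_email}" \
      --role="$role"
  done
}

# Example (hypothetical names):
#   create_logging_sa my-project docker-logs
#   gcloud compute instances create my-instance \
#     --zone=us-west1-a \
#     --service-account=docker-logs@my-project.iam.gserviceaccount.com \
#     --scopes=cloud-platform
```

The same --service-account and --scopes flags can be used when creating an instance template instead of a single instance.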

Tell Docker to use the gcplogs driver

WARNING: Using the gcplogs driver will prevent you from inspecting logs with the “docker logs” command; you will only be able to access them from Stackdriver Logging.

This driver already ships with Docker, so you won’t need to install anything besides the Docker daemon. Depending on how you run the containers, the usage of the driver differs slightly.

Using docker run

    docker run --log-driver=gcplogs ...

Using docker-compose

    services:
      nginx:
        image: nginx
        container_name: nginx
        logging:
          driver: gcplogs
        ports:
          - "80:80"
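
Whichever way the container is started, it can be worth checking that the driver was actually picked up. A minimal sketch, assuming a container named nginx as in the example above (the function name is made up for illustration):

```shell
# Print the logging driver a given container was started with.
# $1: container name or ID
container_log_driver() {
  docker inspect --format '{{.HostConfig.LogConfig.Type}}' "$1"
}

# Example:
#   container_log_driver nginx
# To see the default driver configured for the daemon instead:
#   docker info --format '{{.LoggingDriver}}'
```

If the function prints anything other than gcplogs, the container is still using a different logging driver.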

Can I send logs from external sources?

Sure! If you would like to send Docker logs to Stackdriver from outside GCP (for example, from an AWS instance), you can still do it. You just need to provide the credentials through an environment variable and start the container with a few additional parameters.

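First, you will need to set the GOOGLE_APPLICATION_CREDENTIALS variable to the path of the service account key file (there are official docs about this: https://docs.docker.com/machine/drivers/gce/). The variable must be visible to the Docker daemon, not just to the shell that runs the docker client, because it is the daemon that ships the logs. You should be able to use the same service account we created above.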
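
On a host where Docker is managed by systemd, one way to expose the variable to the daemon is a drop-in file for docker.service. The sketch below assumes that setup; the key path, the drop-in file name and the function name are placeholders chosen for illustration.

```shell
# Write a systemd drop-in that exposes the key file to the Docker daemon.
# $1: path to the service account key (JSON) on this host
# $2: drop-in directory (defaults to the usual location for docker.service)
write_gcp_credentials_dropin() {
  local key_file="$1"
  local dropin_dir="${2:-/etc/systemd/system/docker.service.d}"

  mkdir -p "$dropin_dir"
  cat > "$dropin_dir/gcp-credentials.conf" <<EOF
[Service]
Environment="GOOGLE_APPLICATION_CREDENTIALS=$key_file"
EOF
}

# Example (run as root; the key path is a placeholder):
#   write_gcp_credentials_dropin /etc/docker/gcp-key.json
#   systemctl daemon-reload
#   systemctl restart docker
```

The daemon only reads the variable at startup, so the restart at the end is needed before any container can use the driver.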

Then you just need to run docker with the following parameters:

    docker run --log-driver=gcplogs --log-opt gcp-project=test-project --log-opt gcp-meta-zone=west1 --log-opt gcp-meta-name=$(hostname) your/application

Visualizing logs

By default, all the logs are grouped by instance. If you have an autoscaler, and therefore multiple replicas of the container running, you might want to filter the logs by container name. To do so, you just need to write the desired query:

    resource.type="gce_instance" "my_container_name"

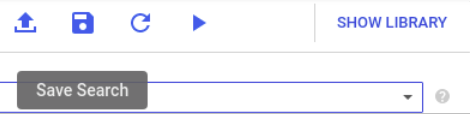
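
If you prefer the terminal, the same kind of query can also be run with the gcloud CLI. A minimal sketch, assuming gcloud is installed and authenticated; the function name, the project ID and the limit and freshness values are arbitrary choices for illustration:

```shell
# Read recent log entries from GCE instances that mention a container name.
# $1: project ID, $2: container name (must not contain double quotes)
read_container_logs() {
  local project="$1" container="$2"
  gcloud logging read \
    "resource.type=\"gce_instance\" AND \"${container}\"" \
    --project="$project" --limit=20 --freshness=1h
}

# Example (hypothetical project):
#   read_container_logs my-project my_container_name
```

The explicit AND is equivalent to the space-separated form used in the log viewer query.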

Stackdriver lets you save queries so that you can run them again without retyping them. To do so, just write your query and click “Save Search”. Afterwards, you’ll find it under “Show library”.

And if you just want to see ALL the logs sent by Docker, you can click on the dropdown menu “All logs” and select “gcplogs-docker-driver”. With this option set, you’ll see every log entry that was sent using this driver.
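
The dropdown selection corresponds to a filter on the logName field, so it can be written as a query too. The helper below is a sketch that only builds the filter string; the function name is made up, and the log ID is passed in as a parameter so you can use exactly the name shown in the dropdown for your project.

```shell
# Build a filter that matches a single log by its ID.
# $1: project ID, $2: log ID as shown in the log viewer dropdown
driver_log_filter() {
  printf 'logName="projects/%s/logs/%s"' "$1" "$2"
}

# Example (hypothetical project; replace LOG_ID with the name from the dropdown):
#   gcloud logging read "$(driver_log_filter my-project LOG_ID)" --limit=20
```

The resulting string can also be pasted directly into the log viewer's query box.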

To summarize

As you can see, it was pretty easy to set everything up. Although it is usually recommended to use the Stackdriver Logging agent, in my opinion this is still a valid solution, and it can be useful when you don’t want to, or don’t have time to, build a custom image or install additional software on the instances.

The most annoying part is creating the service account and setting the correct roles and scopes, but you would have to deal with that when using the Stackdriver Logging agent as well.

I hope this post was of some use to you. Do not hesitate to contact us if you would like us to help you with your projects. See you in the next one!